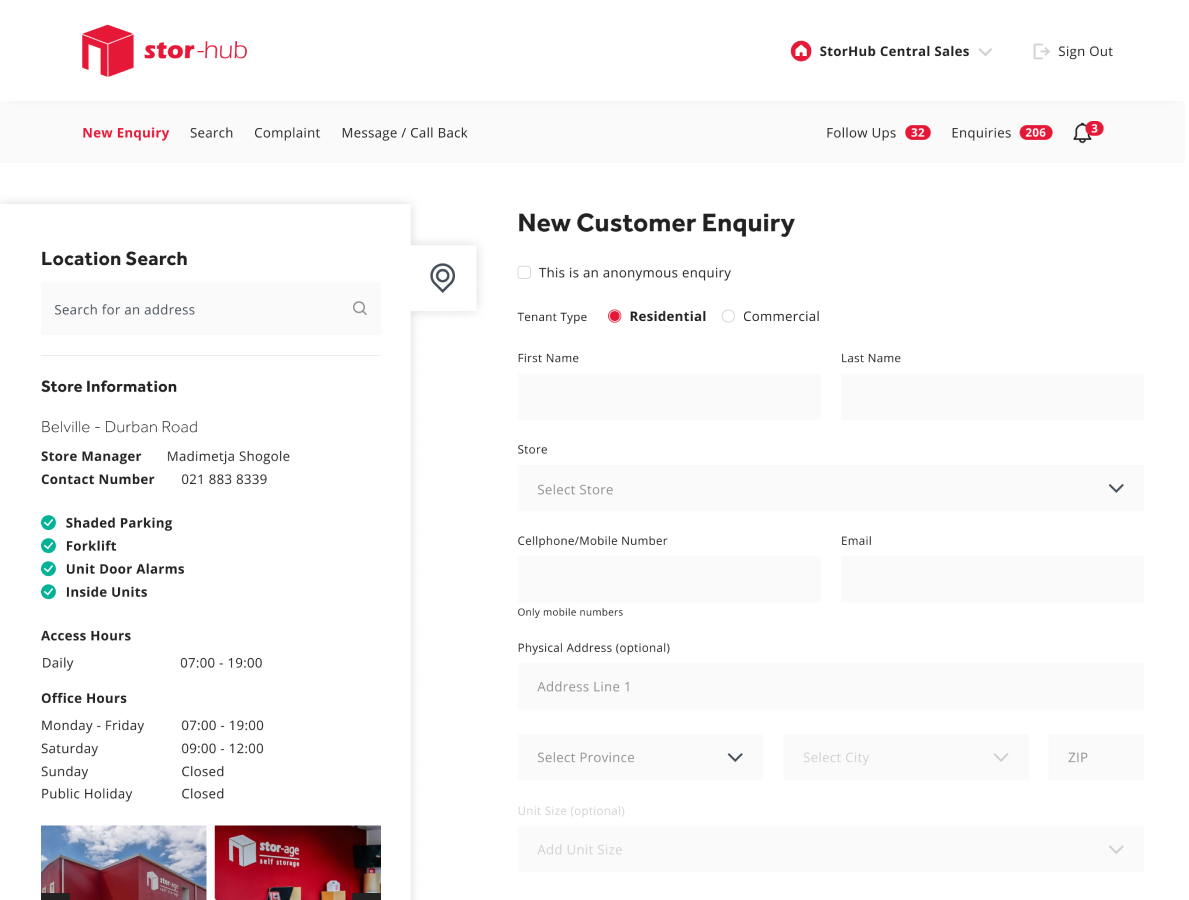

Knowledge Sharing

Insights, practical tips, and recaps from our internal learning sessions.

Key Topics & Takeaways

1. Figma Organisation

- Teams & Projects

Figma does not utilise traditional folders. Instead, you establish a team and create projects for that team. Each project contains multiple design files (or “documents”), which can be either Figma (for UI designs) or FigJam (for whiteboarding, flows, and IA diagrams).

- Library Files

Each project’s “library file” contains globally reusable styles and components, such as buttons, inputs, and icons. Once published, these libraries can be imported and updated across all design files.

2. Reusable Styles & Variables

- Text and Colour Styles

You can define typographic scales, colour palettes, and layer effects. These become selectable global styles, enabling brand consistency and easy updates throughout a project.

- New Local Variables

Figma’s local variables feature allows you to store reusable values (e.g., specific hex colours, spacing sizes, and boolean flags). This is particularly useful for:

- Colour Modes (light, dark, and brand variations).

- Design Tokens (naming semantic tokens like Button.Bg or Spacing.Medium rather than hex codes).

- Breakpoints & Spacing (e.g., Mobile = 375px, Tablet = 768px, Desktop = 1024px).
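To make the idea of semantic tokens and colour modes concrete, here is a minimal sketch in plain JavaScript. It is not how Figma stores variables internally. The token names other than Button.Bg and Spacing.Medium, and all hex and spacing values, are assumptions chosen for illustration; only the breakpoints come from the list above.

```javascript
// Hypothetical primitive palette (values are illustrative only).
const primitives = {
  "Blue.600": "#1d4ed8",
  "Blue.300": "#93c5fd",
  "Grey.900": "#111827",
  "White": "#ffffff",
};

// Semantic tokens alias other tokens, with one alias per colour mode.
const semantic = {
  "Brand.Primary": { light: "Blue.600", dark: "Blue.300" },
  "Button.Bg": { light: "Brand.Primary", dark: "Brand.Primary" },
  "Button.Text": { light: "White", dark: "Grey.900" },
};

// Spacing values are assumed; breakpoints are the ones listed above (px).
const spacing = { "Spacing.Small": 8, "Spacing.Medium": 16, "Spacing.Large": 24 };
const breakpoints = { Mobile: 375, Tablet: 768, Desktop: 1024 };

// Follow aliases for the given mode until a primitive value is reached.
function resolveColour(name, mode) {
  const seen = new Set();
  let current = name;
  while (current in semantic) {
    if (seen.has(current)) throw new Error(`Circular alias at ${current}`);
    seen.add(current);
    current = semantic[current][mode];
  }
  if (!(current in primitives)) throw new Error(`Unknown token: ${current}`);
  return primitives[current];
}

// Return the largest breakpoint whose minimum width fits the viewport.
function breakpointFor(width) {
  let match = "Mobile";
  for (const [name, minWidth] of Object.entries(breakpoints)) {
    if (width >= minWidth) match = name;
  }
  return match;
}

console.log(resolveColour("Button.Bg", "light")); // "#1d4ed8"
console.log(resolveColour("Button.Bg", "dark"));  // "#93c5fd"
console.log(breakpointFor(800));                  // "Tablet"
```

Because Button.Bg only points at Brand.Primary, switching modes or changing the brand colour never requires touching the button token itself.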

3. Creating Components & Variants

- Components vs. Instances

- Component: A primary design element featuring default properties (e.g., a button or an input field).

- Instance: A “copy” of that component used in the actual screens. Its properties, such as text labels, icons, or states, can be overridden.

- Variants & Properties

Add “States” (e.g., Default, Hover, Disabled) to a single component set. This creates a more dynamic, single source of truth. Designers and developers can switch variants or toggle properties (icon on/off, text changes, etc.) from a drop-down menu instead of maintaining separate components.

- Auto Layout

Figma’s Auto Layout feature makes it easy to:

- Dynamically adjust spacing or padding around content.

- Quickly build forms or lists where elements move or resize automatically.

- Ensure consistent vertical/horizontal spacing across the design.

4. Practical Demo: Building a Mini Design System

During the demo, the presenters showed the team how to:

- Set Up Basic Color & Type Variables

- Create a “Brand.Primary” color token in the library.

- Reference that token for ButtonBackground, LinkText, etc.

- If you change Brand.Primary, it propagates everywhere you’ve used it.

- Design Inputs with Multiple States

- Default, Focus, Error, and Disabled states.

- Icon toggles (show/hide icons in the same component).

- Use properties to swap icons or update text labels quickly.

- Build a Button Component

- Variants for Default vs. Hover.

- Boolean properties to turn icons on/off (icon left, icon right).

- Text property for the label, easily updated without re-editing the component’s layers.
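The button from the demo maps naturally onto a small set of properties in code. The sketch below is one possible way to mirror them in JavaScript, not what was shown in the session: the function name `buttonInstance`, the `[icon]` placeholder, and the returned shape are all hypothetical.

```javascript
// Allowed values for the "State" variant property (assumed set).
const BUTTON_STATES = ["Default", "Hover", "Disabled"];

// Describe a button instance from its variant, boolean, and text properties.
function buttonInstance({
  state = "Default",
  iconLeft = false,
  iconRight = false,
  label = "Button",
} = {}) {
  if (!BUTTON_STATES.includes(state)) {
    throw new Error(`Unknown state: ${state}`);
  }
  const parts = [];
  if (iconLeft) parts.push("[icon]");   // boolean property: icon left
  parts.push(label);                    // text property: label
  if (iconRight) parts.push("[icon]");  // boolean property: icon right
  return { state, disabled: state === "Disabled", content: parts.join(" ") };
}

console.log(buttonInstance({ label: "Save", iconLeft: true }));
console.log(buttonInstance({ label: "Next", iconRight: true, state: "Hover" }));
```

Overriding a property on an instance corresponds to passing a different argument here; the component definition itself stays untouched.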

5. Using Figma Dev Mode

- Developer Handoff

By switching to Dev Mode in Figma, developers can:

- Inspect layout properties (padding, spacing) with clarity.

- See auto-generated code snippets (CSS, React, Angular).

- Use the new “Playground” feature to test each variant/state of a component.

- Access design tokens (colours, spacing, typography, etc.) as structured data for faster integration.

- Properties & Type Generation

Dev Mode can even suggest type definitions (e.g., boolean vs. string for component properties) to accelerate front-end coding.

Why It Matters

- Scalability & Consistency

A well-structured design system ensures that as new screens, brands, or features are added, everything remains visually cohesive and easier to update.

- Faster Iterations

When designers and developers share the same “source of truth” for fonts, colours, and component variants, miscommunication and repetitive work are reduced.

- Easier Collaboration

Figma’s real-time collaboration features (multiple cursors, chat overlays, etc.) and Dev Mode’s “Playground” enable entire teams to contribute without stepping on each other’s toes.

- Future-Proofing

By embracing Figma variables and design tokens, teams are better prepared to scale up or pivot to multiple theme modes, such as light/dark or multi-brand theming.

Final thoughts

This session showed the power of Figma’s component-based design approach for large-scale, multi-brand projects. By leveraging local variables, auto layout, robust library files, and new Dev Mode handoff features, teams can maintain a consistent UI design, reduce design debt, and speed up collaboration with developers. As Figma continues to refine its tools, especially around dev handoff, these best practices will help teams stay at the forefront of modern design workflows.

This session provided an in-depth look at organising Figma in a way that scales across multiple teams, clients, and projects.

1. Roles, Permissions, and Billing

Figma uses four main role types: Owner/Admin, Editor, Viewer, and Guest, with different levels of permissions:

- Owner / Admin

- Can manage the organisation/team settings and approve or deny requests for plugin use or new Editors.

- Can lock default seat types to avoid unexpectedly paying for new Editors.

- Responsible for controlling external sharing, domain restrictions, and plugin approvals.

- Editor

- Can edit and create files, comment, and invite new members (so keep an eye on your billing settings).

- Incurs a paid seat immediately if assigned this role on an Organisation plan.

- Viewer

- Can view and comment on files, but not edit them.

- Doesn’t require a paid seat unless upgraded.

- Guest

- Typically used for external clients or quick feedback sessions. Limited actions.

Key Billing Tips

- Default Seat Type: Always set it to “Viewer Restricted” to prevent automatic Editor upgrades.

- Upgrade Requests: Turn on daily or weekly notifications to see if anyone requests an Editor seat.

- True-up Periods: Figma bills for any seat changes (e.g., new Editors) on a set schedule. Use the cleanup window before the billing date to remove or downgrade temporary editors.
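The cleanup before a true-up can be approximated with a simple rule: flag Editors who have not been active recently. The sketch below assumes a hypothetical list of members with a seat type and a last-active date; Figma does not necessarily export data in this shape, and the 30-day idle limit is an arbitrary default.

```javascript
// Hypothetical member records; "lastActive" is an ISO date string.
const members = [
  { name: "Thandi", seat: "Editor", lastActive: "2024-05-28" },
  { name: "Pieter", seat: "Editor", lastActive: "2024-03-02" },
  { name: "Client reviewer", seat: "Viewer", lastActive: "2024-05-30" },
];

// Editors idle for more than `maxIdleDays` before the billing date are
// candidates for a downgrade to a free seat.
function downgradeCandidates(list, billingDate, maxIdleDays = 30) {
  const dayMs = 24 * 60 * 60 * 1000;
  const cutoff = new Date(billingDate).getTime() - maxIdleDays * dayMs;
  return list
    .filter((m) => m.seat === "Editor")
    .filter((m) => new Date(m.lastActive).getTime() < cutoff)
    .map((m) => m.name);
}

console.log(downgradeCandidates(members, "2024-06-01")); // [ 'Pieter' ]
```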

2. Team and Project Structure

Rather than a standard folder or file system, Figma organises work into top-level “Teams”. Within each Team, you have Projects and multiple Files within each Project. The recommended approach:

- Teams

- Often mapped 1:1 with clients (in an agency) or major product groups (in a product company).

- All team members can see the listed Projects, but only certain people (Editors) can modify files.

- Projects

- Each Project represents a significant feature area, product subteam, or separate statement of work.

- Example: In a banking client’s Team, you might have Projects for “Onboarding Flow”, “Secure Dashboard”, “Wireframes”, and “Archived Production Work”.

- Files

- Every Project can have multiple Figma files (UI designs) and/or FigJam files (whiteboarding and user flows).

- A typical Project might include:

- Library File (published styles or components)

- Main Design File (source of truth for final UI)

- Exploration / Wireframes File (early sketches or experiments)

- Research FigJam (user flows, sticky notes, and journey maps)

3. Organising Files and Pages

It’s recommended that each Figma file be organised as follows:

- Pages per Feature or Flow

- You can keep your file tidy by dedicating separate pages for each distinct flow or section (e.g., “Dashboard,” “Reports,” or “Profile”).

- Use divider lines (three dashes in a page name) to visually separate sets of pages.

- Status Tags

- Mark pages or frames as “Ready for Dev”, “In Progress”, or “Archived” so that designers and developers know what’s current.

- Cover Pages & Thumbnails

- Maintain consistent cover designs with clear labels, such as “In Progress,” “Ready for Client,” or “Production.”

- You can store these cover templates in a shared library and quickly drag them to any new file.

- Version History / Snapshots

- Use “Save to Version History” with meaningful labels to create snapshots.

- This approach keeps the active file clean (you can remove old pages) while preserving older explorations for reference.

4. Libraries & Components

- Global Library

- A single library that defines your design tokens, colour palettes, typography, spacing, icons, and reusable components (buttons, inputs, and navigation).

- Team members can attach or detach it from individual Projects as needed.

- Naming Conventions

- Use consistent naming for colours and styles (e.g., Brand/Primary, Text/Body/16/Regular).

- Stick to semantic naming for tokens rather than arbitrary colour names (e.g., “brand-primary”, not “navy-blue”).

- Component Sets and Variants

- Build robust, property-based components (e.g., a Button with states for “Default”, “Hover”, “Disabled”, and optional icons).

- Ensures a single source of truth and easy overrides for specific needs.

5. Dev Mode and Handoff

Figma’s Dev Mode allows developers to:

- Explore Components in a Playground

- Each master component with well-defined variants or properties becomes testable in a playground panel.

- Devs can see how to switch states, toggle icons, and change copy, mirroring what they’ll implement in the code.

- Compare Changes

- If a design is updated after being marked “Ready for Dev,” you can add a note describing the change. This enables developers to compare old and new versions side-by-side.

- VS Code Integration & Code Snippets

- Dev Mode can generate partial React/Angular code plus CSS/variables.

- While imperfect, it speeds up basic handoff and helps developers to see the correct tokens.

6. Admin Settings & Plugin Approval

For teams handling sensitive data or under NDA:

- Domain Restrictions

- Allow links to be viewed externally only if necessary. Turn link access off if you need to keep work strictly internal.

- Plugin / Widget Approval

- Only Admins can allow plugins; be wary of third-party plugins that access your file data.

- Evaluate plugin dependencies carefully so your design system doesn’t break if a plugin is deprecated.

- Controlled Font Uploads

- Upload brand or client-specific fonts at the Organisation level.

- Ensure you have the proper font licensing.

7. Putting It All Together

A streamlined and well-governed Figma environment saves time, reduces confusion, and ensures a smoother design-to-development pipeline.

- Plan Your Structure: Create Teams for each client or product group, set up Projects for each sub-feature or deliverable, and label and separate your files neatly by purpose.

- Lock Down Billing: Keep default roles on Viewer Restricted. Monitor seat upgrade requests. Remove or downgrade temporary Editors before the billing “true-up” if they’re no longer active.

- Develop a Library System: Publish a central design library (colours, typography, and components). Encourage designers to use semantic tokens, clear naming, and thorough variant setups.

- Use Dev Mode Wisely: Mark frames or pages as “Ready for Dev,” detail any changes, and let developers track differences. Provide robust component properties so developers can see precisely how states and variations work.

- Maintain Quality: Conduct regular design reviews to remove unused explorations, update file pages, and ensure no one stores crucial designs outside the official library or trunk.

Ultimately, Figma’s organisational system and Dev Mode can be powerful enablers of a smooth, well-structured design process – but only if you invest in consistent naming, disciplined library management, and careful user-seat administration.

It takes time to learn any platform, and Azure is no different. Azure is generally speaking quite easy to use, belying the complexity and hidden pitfalls one might encounter when provisioning and managing resources.

In this article, I will try to give some practical advice from what I have learned during our NML Azure journey.

Find your happy place

The first thing that will save you loads of pain and frustration is to choose a region and stick to it. When provisioning a resource you can select the region where you want the resource to be hosted. There is no "default", and so at times, the default selected value in the region dropdown will be different from what you previously selected. It has often happened that we have had, by accident, resources and resource groups in widely different regions.

The choice of the Azure region you want to target will depend on your precise criteria, but generally, you will want to choose the region that gives you the least amount of network latency. For South Africa, that used to be West Europe or North Europe. You also now have UK West and UK South with comparable latencies to the prior regions. However, at the time of writing, South Africa North is generally available, with most of the Azure resources one needs for a common software system. South Africa West is running and used for regional replication and backups, but is not generally available yet.

Birds of a feather

NML is a services company and we host our development, testing, user acceptance testing, and production environments on behalf of our clients on Azure. Over time we learned that provisioning a dedicated subscription per client or project, and then grouping resources by environment within that subscription, works best.

By dedicating a subscription per project or client you make billing much easier, as Azure is able to give you cost per subscription at a glance. It will also help with managing costs, as you can set up cost alerts and budgets on a subscription.

Grouping resources by a target environment is essential to configuring secure infrastructure for a project. Probably the most important and helpful tip here is to find a naming convention and stick to it. It will make your maintenance much more bearable.

At NML, we use the following convention: <PROJECT_ACRONYM>-<ENVIRONMENT>. For a project, say AdventureWorks, we would have the following resource groups:

- advwrks-dev

- advwrks-qa

- advwrks-uat

- advwrks-prod

- advwrks-shared
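A convention like this is easy to encode so that nobody has to type resource group names by hand. The following is a minimal sketch, assuming the five environments listed above and assuming acronyms are lowercase letters and digits only; the function names are hypothetical.

```javascript
// Environments taken from the resource group list above.
const ENVIRONMENTS = ["dev", "qa", "uat", "prod", "shared"];

// Build a single resource group name: <PROJECT_ACRONYM>-<ENVIRONMENT>.
function resourceGroupName(projectAcronym, environment) {
  // Assumption: acronyms are restricted to lowercase letters and digits.
  if (!/^[a-z0-9]+$/.test(projectAcronym)) {
    throw new Error(`Invalid project acronym: ${projectAcronym}`);
  }
  if (!ENVIRONMENTS.includes(environment)) {
    throw new Error(`Unknown environment: ${environment}`);
  }
  return `${projectAcronym}-${environment}`;
}

// List every resource group a project is expected to have.
function resourceGroupsFor(projectAcronym) {
  return ENVIRONMENTS.map((env) => resourceGroupName(projectAcronym, env));
}

console.log(resourceGroupsFor("advwrks"));
```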

Azure costs can add up quickly if one is not careful, and so at NML we generally have a shared resource group for our development and testing environments with resources that can be shared and reused. Production is always isolated to ensure a good security posture. The resource types that are most responsible for ballooning costs and that can be shared readily are:

- App Service Plans

- SQL Server

- Cosmos DB

- Redis

- ServiceBus & EventGrid

- Storage Accounts

- Virtual Machines

RBAC properly

Role-Based Access Control (or RBAC) is the heart and soul of resource security on Azure. When hosting a solution for a client, you need to ensure that you protect their interests as best you can. With RBAC you can go a long way towards ensuring that goal.

RBAC works by assigning users one or more roles on any particular resource group or subscription. Each role has a strictly defined set of allowed operations that can be performed, and any action that falls outside will be denied.

The most important piece of advice here is to assign access via Azure AD security groups. Assigning roles individually quickly turns into a management nightmare. By leveraging user security groups, you can easily swap and change user access by only having to update the security groups they belong to. You can then assign the relevant security groups on a resource group level to the roles that are needed. As far as possible, do not assign subscription-wide roles to any user or group. That is because, at the time of writing, Azure does not have deny rights configuration. Roles added on a subscription level will, therefore, apply to all resource groups and resources on an inheritance basis.

We generally make a distinction between development users and production users and will, therefore, have at least two sets of security groups. The groups are named for the project or client and the role that they will be assigned to. For example, for the Contributor role on the AdventureWorks project, we will have the following security groups:

- advwrks-contributors

- advwrks-prod-contributors

There will be similar security groups for each role to be assigned. Examples of roles we generally have are:

- *-contributor

- *-key-vault-contributor

- *-key-vault-reader

- *-web-contributor

- *-monitoring-contributor

Using this scheme allows us to configure developer access to the development environments and to have restricted access to production environments and only temporarily assign access where and when needed.
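The effect of group-based assignment and scope inheritance can be modelled in a few lines. This is a simplified sketch, not the Azure API: the user addresses, the assignment records, and the `effectiveRoles` helper are hypothetical, and real RBAC has more scope levels than the two shown here.

```javascript
// Hypothetical role assignments: each grants a role to a security group
// at a scope (a resource group name, or "subscription").
const assignments = [
  { group: "advwrks-contributors", role: "Contributor", scope: "advwrks-dev" },
  { group: "advwrks-contributors", role: "Contributor", scope: "advwrks-qa" },
  { group: "advwrks-prod-contributors", role: "Contributor", scope: "advwrks-prod" },
  { group: "advwrks-prod-key-vault-readers", role: "Key Vault Reader", scope: "advwrks-prod" },
];

// Hypothetical group memberships per user.
const memberships = {
  "dev@example.com": ["advwrks-contributors"],
  "ops@example.com": ["advwrks-prod-contributors", "advwrks-prod-key-vault-readers"],
};

// Roles a user holds on a resource group: direct assignments plus anything
// inherited from the subscription scope.
function effectiveRoles(user, resourceGroup, list = assignments) {
  const groups = memberships[user] ?? [];
  const roles = list
    .filter((a) => groups.includes(a.group))
    .filter((a) => a.scope === resourceGroup || a.scope === "subscription")
    .map((a) => a.role);
  return [...new Set(roles)].sort();
}

console.log(effectiveRoles("dev@example.com", "advwrks-dev"));  // [ 'Contributor' ]
console.log(effectiveRoles("dev@example.com", "advwrks-prod")); // []

// A single subscription-wide assignment leaks into production:
const leaky = [
  ...assignments,
  { group: "advwrks-contributors", role: "Contributor", scope: "subscription" },
];
console.log(effectiveRoles("dev@example.com", "advwrks-prod", leaky)); // [ 'Contributor' ]
```

Granting temporary production access then amounts to adding a user to a production group and removing them afterwards, with no change to the assignments themselves.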

The help you need

Azure has some built-in features, some for pay, that are essential for running deployments successfully on the platform.

Security Center

Security Center helps in identifying and rectifying potential security risks to your resources. It will identify various platform configuration issues that you can improve, for example, configuring firewalls on key resources like Azure SQL Server, Key Vault, etc. It will also identify configuration on virtual machines that needs attention.

Review Security Center recommendations frequently. Not everything is practical or applicable, but most of it is gold.

Advisor

Advisor is there to help you get the most out of your resources. It checks and recommends improvements on the following:

- High Availability

- Security

- Performance

- Operational excellence

- Cost

Keep checking back in with Advisor, as it will highlight areas in your Azure ecosystem that need attention.

Cost Management + Billing

Here you can, at a glance, see your current monetary exposure across all your Azure resources. You should configure budgets and alerts on subscriptions and/or resource groups. It is a rather unpleasant feeling to receive a bill at the end of the month that is hugely inflated because of a development mistake or infrastructure configuration issue that could have been prevented with a timeous alert.
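The logic behind a budget alert is just a comparison of month-to-date spend against percentage thresholds of the budget. The sketch below illustrates that idea only; it does not call Azure, and the function name, the default thresholds, and the amounts are assumptions.

```javascript
// Return the alert messages triggered by the current spend.
// `thresholds` are percentages of the budget (assumed defaults).
function budgetAlerts(spend, budget, thresholds = [50, 80, 100]) {
  if (budget <= 0) throw new Error("Budget must be positive");
  return thresholds
    // Compare in integer-friendly form to avoid floating-point surprises.
    .filter((percent) => spend * 100 >= percent * budget)
    .map((percent) => `${percent}% of budget reached`);
}

console.log(budgetAlerts(850, 1000));
// [ '50% of budget reached', '80% of budget reached' ]
```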

General

App Service Plans

Be careful of racking up a slew of underutilized App Service Plans. They are mostly used for Web Apps, Logic Apps, and Functions, and should be shared. Some features are only available on higher-priced tiers, and over time you might realize you are paying more than is necessary because App Service Plans had been scaled up to unlock some needed features.

Cosmos DB

Cosmos DB is a wonderful piece of technology, but its costing is a little like black magic. It is not priced by what you use, but rather by what you think you are going to use (reserved resource model). Be conservative when you configure Cosmos DB, and scale up once you have data indicating that you need to reserve more resources.

Naming conventions

Azure has a wonderful search feature to find resources quickly. A standard, practical naming convention across all resources (not just resource groups) will save you oodles of time. It is much better to have an advwrks-dev-frontend web app in the advwrks-dev resource group, than to just have frontend in there. Make sure everybody that has permissions to create resources follows the scheme as closely as practically possible.
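A quick check like the following can flag resources that drift from the scheme. It is a minimal sketch that assumes the convention is "resource group name, a hyphen, then a descriptive suffix"; the inventory records and function names are hypothetical.

```javascript
// True when the resource name is prefixed with its resource group name.
function followsConvention(resourceName, resourceGroup) {
  const prefix = `${resourceGroup}-`;
  return resourceName.startsWith(prefix) && resourceName.length > prefix.length;
}

// Names of resources that do not follow the convention.
function nonConforming(resources) {
  return resources
    .filter((r) => !followsConvention(r.name, r.resourceGroup))
    .map((r) => r.name);
}

// Hypothetical inventory for illustration.
const inventory = [
  { name: "advwrks-dev-frontend", resourceGroup: "advwrks-dev" },
  { name: "frontend", resourceGroup: "advwrks-dev" },
  { name: "advwrks-prod-api", resourceGroup: "advwrks-prod" },
];

console.log(nonConforming(inventory)); // [ 'frontend' ]
```

Some resource types cannot follow the scheme exactly. Storage account names, for instance, allow only lowercase letters and digits, so a hyphen-free variant is needed there.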

Conclusion

Azure is a tremendously useful and pleasant platform to work on. However, you can safeguard your experience (and wallet!) by implementing and following some basic guidelines like those described above.

The difference between a Monolithic and Microservice Architecture

Consider a grand piano, where a broken string can render the entire instrument out of tune. This is like a monolithic architecture, unified but overall vulnerable to a single flaw. Now imagine an orchestra, composed of many musicians; if one instrument fails, the music plays on with minimal disruption. This is much like a microservice architecture, composed of autonomous, adaptable parts that make the overall system more resilient.

Advantages of Monolithic Architecture

- Simpler Development: It's less complex to develop since all components exist together.

- Easier Testing: Testing is straightforward as there’s only one piece to test.

- Efficient Communication: Components communicate efficiently due to tight coupling.

- Simple Deployment: The entire codebase is deployed as one release.

- Shared Memory Access: All components share the same memory, making it more efficient.

Disadvantages of Monolithic Architecture

- Difficult Maintenance: Upkeep is tough because all components are interlinked.

- Limited Scalability: Scaling is challenging since you need to scale the entire system, not just parts.

- Slow Deployment: Updating or adding new features means redeploying the entire application.

- Hard Fault Isolation: Isolating faults is difficult because if one part fails, it can bring down the whole system.

- Changes Affect Entire System: Any modification can impact the entire system, potentially leading to unexpected issues.

Advantages of Microservice Architecture

- Accelerated Scalability: Deploying services across multiple servers can mitigate the performance impact of individual components.

- Improved Fault Isolation: If one service fails, it’s easier to identify and fix the issue without affecting the entire system.

- Enhanced Productivity: Focus on one small piece of the system at a time.

- Quick Development & Deployment: Only small bits of code are deployed, reducing deployment time.

- Cost-Effective: Localizing services reduces overall development and maintenance costs.

Disadvantages of Microservice Architecture

- Increased Complexity: Developers need to write extra code for smooth communication between modules.

- Deployment & Versioning Challenges: Coordinating deployments and managing versions across multiple services can be complex.

- Testing Complexity: Requires setup to test microservices, though containerization (e.g., using Docker) can mitigate this.

- Data Management: Managing data consistency and transactions across multiple services can be complex.

Real-World Application

Example of Monolithic System

In a monolithic system, a user accesses the system through a web application that interacts with an API and a database. This setup creates a single point of failure; if the API is down, the entire system is down.

Example of Microservice System

In a microservice system, the application is broken into multiple services, each with its own database. If one service fails, the rest of the application can still function, eliminating single points of failure and enhancing system robustness.

Our Current System

Currently, our system is a hybrid between monolithic and microservice architecture. We have multiple front-end applications but a monolithic API. This means if the API goes down, all applications go down. Extending our legacy code is challenging and often results in breaking other systems.

Proposed Microservice Design

We are planning to transition to a microservice architecture. This involves splitting the current monolithic API into smaller, independent services. Each service will have its own database and communicate with others as needed. This design aims to improve fault isolation and scalability while reducing single points of failure.

Implementation Strategy

We plan to use the Strangler Fig Pattern for transitioning. This involves incrementally migrating from the legacy system to the new system by building new microservices alongside the existing system and gradually phasing out the old code.
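At the heart of the Strangler Fig Pattern sits a routing layer that sends migrated paths to new services and everything else to the legacy system. The sketch below shows only that routing decision; the route prefixes and backend names are hypothetical and do not describe our actual services.

```javascript
// Hypothetical route prefixes that have already been migrated.
const migratedPrefixes = ["/orders", "/invoices"];

// Decide which backend should handle a request path.
function resolveBackend(path, migrated = migratedPrefixes) {
  const hit = migrated.find(
    (prefix) => path === prefix || path.startsWith(`${prefix}/`)
  );
  // Migrated prefixes go to their own service; the rest stays on the monolith.
  return hit ? `service:${hit.slice(1)}` : "legacy-api";
}

console.log(resolveBackend("/orders/42"));   // "service:orders"
console.log(resolveBackend("/customers/7")); // "legacy-api"
```

As each piece of functionality is rebuilt, its prefix is added to the list, until nothing routes to the legacy API and it can be retired.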

Handling Service-to-Service Calls

We are exploring Dapr (Distributed Application Runtime) to handle service-to-service communication and other microservice challenges. Dapr acts as a sidecar to your application, simplifying the development of scalable microservices by managing infrastructure concerns. Stay tuned as we continue to share our experiences and insights on this journey of transitioning our architecture!

Exploring Astro: A Fast and Flexible Framework for Building Content-Rich Websites

In one of our recent knowledge-sharing sessions we dove into Astro, an innovative framework designed to help you build fast, content-focused websites. If you're new to Astro, head over to their official site, Astro.build, to get started. In this post, we'll explore what Astro is, its key features, and a quick demo to showcase its capabilities.

What is Astro?

Astro is an all-in-one web framework aimed at creating fast, content-focused websites. Here are some key highlights:

- Content-Focused: Ideal for content-rich websites.

- Server-First: HTML is rendered on the server for faster load times.

- Zero JS by Default: No unnecessary JavaScript runtime overhead.

- Edge Ready: Deploy anywhere, including global edge runtimes like Deno or Cloudflare.

- Customizable: Over 100 integrations available.

- UI Agnostic: Supports various frameworks like React, Preact, Svelte, Vue, and more.

Component Island Architecture

Astro’s key feature is the component island architecture. This allows interactive UI components to exist on an otherwise static HTML page, providing a significant performance boost.

- Server-First API Design: Moves hydration off user devices.

- Interactive Islands: Each component island renders in isolation, making the page fast and efficient.

- Partial Hydration: Only the necessary components are hydrated, leaving the rest as static HTML.

Creating an Astro Project

Creating an Astro project is straightforward. You can start by running `yarn create astro`, and follow the prompts to set up your project. Astro provides several templates to kickstart your development, including a personal website template with pre-built components and best practices.

Project Structure

An Astro project typically includes:

- src: Source files, including components and pages.

- public: Static assets like images and fonts.

- astro.config.mjs: Configuration settings for Astro.

- package.json: Project dependencies and scripts.

Building with Astro

Astro components are the building blocks of your project. Each component consists of:

- Component Script: JavaScript code that runs on the server.

- Component Template: HTML that defines the component structure.

For example, a simple Astro component might look like this:

```astro
---
// JavaScript code
const name = "Astro";
---

<!DOCTYPE html>
<html>
  <head>
    <title>{name} Demo</title>
  </head>
  <body>
    <h1>Welcome to {name}</h1>
  </body>
</html>
```

Integrating UI Frameworks

Astro supports integrating various UI frameworks. For example, to use React, you simply add the @astrojs/react integration:

```sh
yarn add @astrojs/react
```

Then, once the integration is listed under `integrations` in astro.config.mjs, you can import and use React components within your Astro project.

Fetching Data

Astro supports server-side data fetching using the global fetch function. This allows you to fetch data at build time and render it as static HTML.

```astro
---
// Runs on the server at build time
const res = await fetch('https://rickandmortyapi.com/api/character');
const data = await res.json();
---

<html>
  <body>
    {data.results.map(character => (
      <div>
        <h2>{character.name}</h2>
        <p>{character.status}</p>
        <p>{character.species}</p>
      </div>
    ))}
  </body>
</html>
```

Adding Styles

Astro supports various styling options, including CSS, CSS modules, and even preprocessors like Sass. You can scope styles to specific components or apply global styles as needed.

Demo Time

In our demo, we created a simple Astro project and explored how to:

- Set up a project structure.

- Create and import components.

- Fetch data from an API.

- Apply styles using CSS and CSS modules.

- Integrate with React for dynamic components.

Conclusion

Astro is a powerful framework for building fast, content-rich websites with ease. Its component island architecture and server-first approach make it a standout choice for modern web development. Whether you're building a personal blog, a static site, or a complex web application, Astro provides the tools and flexibility to get the job done efficiently.

We hope this session has given you a good introduction to Astro and its capabilities. Feel free to explore further and start building your next project with Astro!


Any sufficiently advanced technology is indistinguishable from magic.

The best-known and most widely cited of Arthur C Clarke’s three laws.

Another axiom relevant to the world of software development and AI is this:

Bullshit in, bullshit out.

Those two truisms should be givens in an AI-first world. So, what will help a software development company like NML differentiate itself from its peers in the coming years?

Let me give you some of my thoughts and thus introduce an AI-first series of posts I’d like to publish.

Sixteen years ago, I launched the software development house NML. In 2016, NML birthed Atura, an AI-first client service chatbot. Doing so thrust me into the thick of the rapidly evolving world of AI, particularly robotic process automation and natural language processing, well before AI was a hot topic in public discourse.

The advent of AI-driven code generation is creating a major shift in software development. It is forcing my team at NML and me to rethink the skillset we need. Over the next three years, we will disrupt ourselves from the inside out, leveraging the accelerated learnings gained through Atura.

Do more with less.

The CTOs and CIOs in our client base are already asking for this: essentially higher sprint velocities using AI-driven code. What we cannot bypass if we want to avoid producing bullshit and instead create magic is the human interaction required to refine and implement AI-generated code.

Interacting with LLMs and using retrieval-augmented generation (RAG) off domain-specific documents will become a basic, commoditised task. NML and Atura’s differentiators, I believe, will be the ability to build client-specific machine-learning models, refine autogenerated code, and trigger complex and dynamic language flows that interact with operational systems—that is, don’t just retrieve basic textual answers.

For these reasons, my last two hires have been actuaries with little understanding of designing and writing commercial code. They’re learning fast with a little help from their AI friends.

These are interesting times, and adapting to a changing landscape is becoming more critical than growth under rapidly ageing business models and structures. I’m enjoying the change.

Follow me as I go deeper into how we are rethinking our software business at NML in my upcoming posts.

In 2012 I gave my first talk on typography on the web. Titled Web Typography Now, it documented the evolution of type on the web and was timely as universal @font-face support had just landed in all the major browsers. At the same time OpenType features were gaining support via the lower level CSS properties. It was an exciting time for type enthusiasts as we saw a brave new browser world not shackled to the six or so web safe fonts.

Of course our newfound type abilities came with drawbacks. Older versions of Internet Explorer needed a proprietary format. As did early versions of iOS. Performance was a major issue due to browser handling of font downloads and CSS parsing. More often than not we were faced with the horrors of Flash of Invisible Text (FOIT), rendering the page unreadable until the font file finished downloading.

Over the years I have updated my typography talk. It has been presented a number of times in various formats. At meetups (DevUg, IO), at conferences (DevConf 2019, UX Craft South Africa) and in a series of workshops for CTFEDs.

Recently I presented the latest version at a FEDSA meetup. Titled Dynamic and Performant Web Typography, it covers tips for selecting and matching typefaces, the latest techniques for optimising custom font performance, and how to use modern CSS for fluid type that scales seamlessly across devices.

As we now seem to do everything via Zoom (or similar tools), the talk was recorded and you can watch it on the FEDSA YouTube Channel.

One thing that came up in the discussion was around a list of resources on web type. I am currently putting that together in a GitHub repo and will update this post when it's ready.

As a company, NML organically grew over time from a small group of talented developers working on one or two projects, to a company with 60+ people with complementary skills working on multiple projects over long-term contracts. Organic growth is necessary from such humble beginnings, but it tends to breed bad habits and can blind you to flaws in processes and technologies.

Of course, a bad Software Development Life Cycle (SDLC) is by no means an inevitable consequence of organic growth, and companies may find that they have issues only in their processes or only in their technology stack. In our case, however, it turned out to be both!

Organic SDLC

We have always endeavored to be an agile process company, and we have implemented SCRUM concepts and at times Kanban on our projects. Of course, "agile" being a nearly meaningless buzzword these days, and SCRUM being, at best, very poorly implemented industry-wide, our results varied wildly from project to project.

Our SDLC about a year ago was roughly some variation of the following:

[EDIT: To be sure, the following describes only some worst-case scenarios, and is not a reflection of the status quo across even most of the projects at NML at the time.]

1. Requirements gathering and analysis

2. Planning and story breakdown

3. Development

4. Testing

5. Bug fixing

6. Deployment

7. Chaos

8. Hotfixes

9. Testing

10. Start at 7, or if lucky, proceed to 11

11. Retrospective bemoaning insufficient or inaccurate requirements, too little time, not enough testing, not enough visibility on work and progress, etc, etc

12. Start at 1

Looking at the list, one can almost choose any number at random, and find problems within that area. Which is exactly what I started doing when it became part of my responsibilities at NML.

In short, we had the following key issues (by no means a complete list):

No process template

Agile is strong on independent, self-organizing teams, but throughout IT, it seems we interpret that as "figure out what works and just go with it". NML interpreted this no better at times, and we had as many processes as we had projects.

Personally, I dislike processes and structures, as more often than not, they seem arbitrary and aimed at constraining instead of guiding. A guideline is there to help you along a path and sanity check your progress as you move along, without dictating every step and decision. As such, it should be able to serve in more than just a singular context and help problem-solve exceptions.

I have learned that if processes and structures are developed collaboratively, and not dictated, they tend to serve as better understood guidelines. I think one of the main reasons for this is that in order to be collaborative, you need to place good communication high on the list. Goodness knows I have missed that key aspect a couple of times this past year!

Insufficient clarity

Working software over comprehensive documentation

The quote above is from the Agile manifesto. I am a fan, really, I am. This particular line, however, has been abused to within an inch of its life, and it generally has come to mean no documentation.

I actually am a huge proponent of super lightweight requirements gathering and little documentation. That is not the same as superficial requirements gathering and no documentation though!

The key to successfully minimizing heavy requirements gathering and reams of documentation lies within another line from the Agile manifesto:

Customer collaboration over contract negotiation

It is imperative to have excellent customer collaboration. I feel that "contract negotiation" here includes requirements gathering, and that ensuring customers' invested involvement is the only way to have any chance of achieving working software over comprehensive documentation.

Poor team cohesion

If you ask most developers what the makeup of an average-sized agile development team is, invariably the first role listed will be "developer". Now I have not done this exercise, so the following is purely based on my experience as a developer, a team lead, a project manager and a CTO, but if you ask a tester or PM the same question, they will give the same answer.

A huge shortcoming at NML was the fact that development teams were developer-centric. Of course, nothing can get developed without a developer's involvement, but I think one can argue that no effective development can occur without at least a project manager (replace scrum master and product owner if you will), developers, testers, and the customer.

It is not good enough to just develop software. The goal should be to develop software effectively. This spans all disciplines within a team, from effective team management to coding and most definitely also testing.

Without excellent cohesion between these disciplines, being effective becomes nearly impossible.

Stale tooling

The argument could be made that the technology stack and tooling you use to drive your SDLC are not important for the success of delivering projects, and perhaps they are not. But I think it would be a grave mistake to underestimate the value added by using the right tools for your specific circumstances.

At NML, we have always used Atlassian JIRA. It is a very comprehensive project delivery tool with great community support and loads of useful third-party applications. However, in our context, it was completely counterproductive to our efforts. It was not the right tool for us.

Another example was our testing automation efforts with Katalon Studio. In this case, however, there is nothing wrong with the tool, but we were addressing the wrong problems. We opted for tooling over understanding our actual shortcomings.

And now? What now?

Here is how we addressed the above concerns:

- We aligned our project SDLC across the board. We still do not have a "document" that lays down the law, but we collaborated across teams to identify the aspects that work, the aspects that do not, and the aspects that cannot change. We now have a mostly identifiable and familiar process across all our teams.

- We have always been strong on customer collaboration. One of our strengths is that the responsibility for gathering and understanding customer requirements lies with technical team leads and project managers. They form a unit that has both the technical insight and communication know-how to work with the customer and the team. So the major ticket for us to fix with regard to clarity was to specify a minimum set of standards teams should comply with to be able to effectively address requirements. Decent acceptance criteria are a must.

- Our testers are often multi-project team members, and we fell into the habit of excluding them from project planning. The result was that there was very little understanding between the developers and the testers. We addressed this by pulling testers back into planning and making them more integral to the SDLC. The team must identify and add tasks for the tester, which will result in one or more test cases. It improved our overall project delivery visibility and forced us to look at our testing efforts in a whole new light.

- We adopted Azure DevOps. We are a Microsoft technology stack company, and most of our projects end up in the Azure ecosystem. Our project SDLC is much more efficient as we do not need to hop between different tools to push various steps of our SDLC along. Just the efficiency of not having to log into 3 different systems with different credentials is saving us oodles of time and frustration.

Conclusion

We are nowhere near the end of a journey, which to be honest, should have no end.

I can without a shadow of a doubt say that we are in a much better position than a year ago, and I feel that the teams themselves are happier with how they work currently.

We continue to improve and look out for more opportunities to become more effective. From time to time some old habits creep up but along with the amazing people at NML, I look out for these and re-evaluate our processes again.

The only unfortunate thing about Microsoft Azure DevOps is that they chose to use "DevOps" in the name, the latest and greatest buzzword right now. The rest of the news on Azure DevOps is almost exclusively good.

I decided around March of 2018 to move NML from our existing development cloud solutions to Azure DevOps. It has turned out to be one of the most impactful and positive changes we have made this year, and I hope to shine some light on why in this article.

The beginnings

NML has always relied heavily on cloud platforms for most of our software needs. It is a cost-effective way to operate a small to medium business, especially in the software industry. At the beginning of 2019, the cloud services we used looked as follows:

- Atlassian Jira

- Atlassian Jira ServiceDesk

- Atlassian Confluence

- Atlassian Bitbucket

- Octopus Deploy

- Microsoft Office 365

- Microsoft Azure

NML is almost exclusively a Microsoft stack service provider, but we embarked on our cloud journey long before Microsoft had an offering like Azure DevOps in place. As such, we developed our business along the Atlassian line of cloud products.

Why move

The Atlassian suite served us well enough, but it would be a bald-faced lie to say I am a fan of any of the Atlassian cloud platforms. Octopus Deploy was a much later addition as our builds and releases were generally done on JetBrains TeamCity.

Administration

My biggest grievance with the Atlassian suite of products is that they are just too complicated to manage. You almost need a doctorate just to open the administration sections. Take note of the plural: "sections"! With enough time and anti-depressants, you can usually, eventually, navigate your way to what you want, but you have to set aside at least 2 days to get anything done. And you have to go through that every time, since there is virtually no way you can remember what you did previously over a length of time.

Cost

Each platform requires you to have different licenses, which can make it quite an expensive proposition. That is to say, you have to pay for Jira and ServiceDesk and Confluence and Bitbucket and TeamCity and Octopus Deploy all separately!

Complexity

You have wildly different user experiences when working across all these different systems. It is a rather huge barrier to entry for newcomers. You have to have different introduction sessions to cover each, and the poor soul who has to keep up with each platform's particular nuances has no chance of getting it right.

Identity

Identity management is a complete nightmare. In an age where there is a potentially fatal security incident around every corner, identity management is critical. Each user on each platform needs a different account. In fairness, Atlassian does have a single identity across its platforms, which helps some. But even there you have different user experiences, between Bitbucket and the Jira suite for instance.

Effectiveness

Lastly, it is a huge drain on effectiveness to hop between platforms while carrying out one's duties. As a developer, you need to view your story and tasks on Jira. Additional info might be in Confluence. You have to pull your source code and view pull requests on Bitbucket. Builds are on TeamCity and deployments on Octopus Deploy. Every time you have to switch to a different system, there is a certain level of context switching that goes along with it. I have yet to experience positive context switching in my career.

The new state

Our current state of affairs looks as follows:

- Microsoft Azure DevOps

- Atlassian Jira ServiceDesk

- Microsoft Office 365

- Microsoft Azure

As you can see, it is a much shorter list and we are without a doubt better off on each of the pain points.

Administration

DevOps is much, much easier to configure than Atlassian. It is not nearly as configurable, though. That said, I have not missed a single one of those extra configuration options.

Somehow, Azure DevOps has the balance just right, and my experience is that when I am looking for a configuration to achieve something the way Jira does it, it is more as a result of my ignorance on how DevOps approaches the problem.

Cost

Azure DevOps has a pretty simple licensing model, and it will suffice to say here that we are paying tens of thousands of Rands less per month. There is simply no comparison on cost. The simple reason is that we only need to pay for one license for all the features we need.

There are additional costs one needs to be aware of, for instance reserving pipeline hosts, but that is more than covered by the savings on TeamCity and VM licensing alone.

Complexity

Our ecosystem has reduced by 3 platforms. Almost all the work a newcomer will do upon joining will be exclusively limited to Azure DevOps. We have therefore removed a huge hurdle to team integration for new joiners.

Azure DevOps offers a single user experience to:

- Manage sprints, stories, and tasks

- Manage repositories and pull requests

- Configure builds and releases

- Manage quality assurance

Identity

The remaining cloud services, except for Jira ServiceDesk, use Microsoft identities associated with NML. In other words, you only need to log in once. Additionally, we can enforce best security practices like multi-factor authentication, role-based access control (RBAC), etc across the whole business on our most critical platforms. It is incredibly useful to be able to manage all user access to your various platforms from a single place, Azure Active Directory.

Effectiveness

This is only anecdotal, but I strongly believe our teams' effectiveness has improved. We now have seamless integration and tracking from stories to commits to releases. Also, nobody on the team needs to hop between various platforms or interfaces to perform their work from start to finish.

More

The above items are just the pain points Azure DevOps helped us with. Here are some more wonderful features that make it worthwhile:

Incredible build and release system

The builds and releases in Azure DevOps are super easy to configure and maintain. They have extensive support for various build types and release platforms. For example, one of our most used build pipelines relies on Jekyll, a distinctly non-Microsoft solution. We also generate beautiful release notes by leveraging the integrated nature of stories and commits.

Quality Assurance

Testers and the testing effort are part of the project delivery with Azure DevOps. It has been a complete life-saver for us in establishing a working test regime that can verifiably assure quality delivery.

Pull Requests

The pull request system is sublime. You can automatically run CI builds and require that they pass for new pull requests, require a minimum number of approvers, require a work item to justify the pull request, etc, all as part of a repository branch policy.

Conclusion

Our experience on Azure DevOps has been overwhelmingly positive. I put this down in large part to the fact that we are a Microsoft stack company and are already comfortable with and pretty well embedded in the Microsoft scene. However, I think Azure DevOps has a much broader reach than a Microsoft context, and can very easily work for any technology stack.

I 100% accept that what I am about to say in this post will find dissent in the programming community. That is a good thing as any difference of opinion opens dialog which leads to new information and ideas, and ultimately growth. I encourage people to disagree with me at NML, although as any of the developers will tell you, you need to be prepared to defend your position.

Here is the crux of it:

As a developer, if you do not have unit tests to validate your work, you have failed at your job, even if the resulting implementation works flawlessly.

Not just a good idea

My position on this is simple. If a developer tells me that they are done with their implementation, and the answer to "Do you have unit tests?" is "No", then that developer has failed to perform the function that we have hired him/her for.

Unit testing is not just a good idea when appropriate. Delivering a feature without unit testing is delivering less than half of the feature.

I continuously have to drive this point home with our developers (and project managers) and truth be told, I am not sure why. I find it obvious that if I am implementing a required behavior, knowing whether what I implemented works, and being able to let others independently reaffirm that, is well worth the effort.

Speed is important

A constant objection to unit testing is that it takes too much time and that there is too much pressure to get a feature out. Writing good unit tests can take as much time as writing the feature itself, if not more, so the argument goes that when the pressure is on it should be acceptable to skip unit tests.

It is a nonsense objection because it entirely ignores the resulting development life cycle of the feature. There are two reasons why it is nonsense.

Going faster by going slow

My own experience on this is that if I spend the time implementing tests that prove the expected behavior of my code, I rarely have to go back to it. When I do not spend time on writing unit tests, I invariably end up having to return to the implementation over at least a number of cycles.

Considering that any returned work breaks the flow of what you are busy with and that it takes away the time of other people on the team, like testers, not implementing unit tests arguably slows down development for any feature.

Bad estimation practices

It can only be true that writing unit tests takes too much time if you view them as a "nice to have", or something that is a good idea, but not critical to the feature. If that is the developers' mindset, they will incorrectly assess the scope and complexity of the work required, and end up under-estimating the time it will take to implement the feature.

On the other hand, if as a company and development team you accept that unit tests are part of the feature, absolutely integral to delivery, you must include them in your assessment of the scope of work.

I outright forbid development teams at NML to add a unit test task on their boards. Putting a unit test task on a sprint board is telling me that as a developer you do not see unit testing as part of your job, but that you will do it if you have time since it is "a good idea". Absolutely not acceptable. It is in every way you can imagine part of your job, and you should never have to put a placeholder somewhere to remember to do it.

Clients do not care

Another objection often cited is that clients do not care about unit tests and shouldn't have to pay additionally for them.

Again, complete nonsense. Clients do not care about unit tests for their software in the same way that we do not care about cows for our ice cream. We expect the ice cream to be good and tasty, and when it is not we don't blame the cows that produced the milk, we just have a bad experience and never buy from that vendor again. Clients want quality software, and when they do not get what they expect, they do not blame the lack of unit tests, they blame the vendor.

If we are honest about what it really takes to implement quality software, of which unit tests form but one important aspect, then we can go a long way towards eliminating bad experiences.

As a software vendor, unit testing should not be an item on a catalog that the client can choose to have or not. The cost to both you as the vendor and the client is too high to even consider that. The cost is definitely more than what it would be if unit testing were just par for the course of development, and considered upfront.

As a vendor, if you have a client that willingly chooses fragile, unreliable software because it costs less up front and will be delivered faster, then you absolutely need to refer that client to another vendor. What they're really saying is that they want to pay less upfront, and will then, later on, insist that you did not deliver what was agreed on, as they do not have properly working software.

As a client, if you have a vendor giving you the option to skip unit tests, go somewhere else for your software. What they are really saying is that you might get your software fast and cheap, but then after you are committed, you will have to spend at least three times more than you budgeted for and wait twice as long to get the software you want.

Conclusion

Writing code is not an easy endeavor and developers, even the smartest ones, will make mistakes while they develop. The best way to reliably minimize mistakes is to write simple concise code that verifies the intended behavior repeatably, aka unit tests. Unit tests are not additional work or additional cost, they're integral to the development effort. Unit tests should be invisible as an item of work since they are part of the work.

That means that writing unit tests is your job in every way that writing code for a feature is.

All code changes introduce risk

For some reason, this concept is understood at face value but does not really translate into how we developers approach coding.

I have a couple of mantras I repeat over and over to my teams. Here are the top three:

- In virtually all contexts, readability trumps other considerations as it improves maintainability.

- All code changes introduce risk. Code such that when changes are needed, the least amount of code is touched.

- Do the simplest thing first and build from there. You can always make things more complex, but it is much harder to make complex things simpler.

The biggest side effect of the fact that coding is hard, is that it is also very easy to break things. Seemingly innocuous changes can have unforeseen consequences.

SOLID = managing risk

Every one of the five principles in SOLID reduces risk.

Single Responsibility

The more responsibility a module, class or method has, the more likely it is to be touched when change is required. It is just logical. If one piece of code has three behaviors and one of those behaviors needs changes, then all three behaviors are at risk of breaking.

Open/Closed

Allowing implementation to be open for extension and closed for modification hugely reduces risk. If you can implement what is needed by extending an existing class, the only risk is to the new implementation. If you modify a class, especially a base or abstract class, you introduce risk to the class you are modifying, all inheritors and all other code that use it.
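As a rough illustration of the point above, here is a minimal sketch in which a new requirement is met by adding a class rather than editing existing ones. All names (IDiscountRule, NoDiscount, PercentageDiscount, Checkout) are hypothetical and only serve the example; implementing an interface is used here as one form of extension.

```csharp
using System;
using System.Collections.Generic;

// Existing abstraction: once in use, it stays closed for modification.
public interface IDiscountRule
{
    decimal Apply(decimal price);
}

// Existing, already-tested behavior. It is not touched by the new requirement.
public sealed class NoDiscount : IDiscountRule
{
    public decimal Apply(decimal price) => price;
}

// The new requirement arrives as a new class, so the risk is limited to this code.
public sealed class PercentageDiscount : IDiscountRule
{
    private readonly decimal _percent;

    public PercentageDiscount(decimal percent)
    {
        if (percent < 0m || percent > 100m)
            throw new ArgumentOutOfRangeException(nameof(percent));
        _percent = percent;
    }

    public decimal Apply(decimal price) => price - (price * _percent / 100m);
}

// The consumer only knows the abstraction and needs no change either.
public sealed class Checkout
{
    private readonly IDiscountRule _rule;

    public Checkout(IDiscountRule rule)
    {
        _rule = rule ?? throw new ArgumentNullException(nameof(rule));
    }

    public decimal Total(IEnumerable<decimal> prices)
    {
        decimal total = 0m;
        foreach (var price in prices)
            total += _rule.Apply(price);
        return total;
    }
}
```

Passing a PercentageDiscount to Checkout changes the total without a single edit to NoDiscount or Checkout, which is where the reduced risk comes from.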

Liskov Substitution

This is a straightforward one: if you cannot reliably use a concrete class anywhere that only the interface or abstract class is known, you are introducing risk, as you have built in the possibility that your application will fail under specific conditions.
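To make that failure mode concrete, here is a small sketch of a substitution that does not hold. The types (IDocumentStore, InMemoryStore, ReadOnlyStore, Archiver) are invented for the illustration and do not come from any real library.

```csharp
using System;
using System.Collections.Generic;

public interface IDocumentStore
{
    void Save(string name, string content);
    string Load(string name);
}

// Honors the full contract: safe wherever an IDocumentStore is expected.
public sealed class InMemoryStore : IDocumentStore
{
    private readonly Dictionary<string, string> _documents = new Dictionary<string, string>();

    public void Save(string name, string content) => _documents[name] = content;

    public string Load(string name) => _documents[name];
}

// Breaks the contract: compiles as an IDocumentStore, but fails at runtime on Save.
public sealed class ReadOnlyStore : IDocumentStore
{
    private readonly IReadOnlyDictionary<string, string> _documents;

    public ReadOnlyStore(IReadOnlyDictionary<string, string> documents)
    {
        _documents = documents ?? throw new ArgumentNullException(nameof(documents));
    }

    public void Save(string name, string content) =>
        throw new NotSupportedException("This store is read-only.");

    public string Load(string name) => _documents[name];
}

// A caller that only knows the interface. It cannot tell which store is safe.
public static class Archiver
{
    public static string RoundTrip(IDocumentStore store, string name, string content)
    {
        store.Save(name, content);
        return store.Load(name);
    }
}
```

Archiver.RoundTrip works with InMemoryStore and throws with ReadOnlyStore, even though both satisfy the compiler. That hidden runtime condition is the risk the principle is meant to remove.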

Interface Segregation

This aligns very closely with SRP and Liskov substitution, and following this principle reduces risk for the same reasons.

Dependency Inversion

As I pointed out in my article on unit testing, dependency injection, the usual way of applying this principle, allows you to reduce risk by covering almost all your implementation with unit tests.

Additionally, you can change the behavior of a component by registering a different implementation for a dependency. Your risk is less as no changes are needed in any code that uses the dependency. They reference the abstraction of the dependency, generally an interface.

Lastly, inversion of control moves the lifetime management of your dependencies outside of the scope of your implementation. This hugely reduces risk, as you don't need every developer to know the intricacies of building a dependency hierarchy in code, which more often than not they get wrong.
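The registration idea can be sketched as follows. This assumes the Microsoft.Extensions.DependencyInjection package as the container, which the article does not name; INotifier, EmailNotifier, SmsNotifier, OrderService and CompositionRoot are hypothetical names for the example.

```csharp
using System;
using Microsoft.Extensions.DependencyInjection;

public interface INotifier
{
    string Notify(string recipient);
}

public sealed class EmailNotifier : INotifier
{
    public string Notify(string recipient) => $"email:{recipient}";
}

public sealed class SmsNotifier : INotifier
{
    public string Notify(string recipient) => $"sms:{recipient}";
}

// Depends only on the abstraction, so it never changes when the registration does.
public sealed class OrderService
{
    private readonly INotifier _notifier;

    public OrderService(INotifier notifier)
    {
        _notifier = notifier ?? throw new ArgumentNullException(nameof(notifier));
    }

    public string Confirm(string customer) => _notifier.Notify(customer);
}

public static class CompositionRoot
{
    // The only place that knows which concrete notifier is used and how long it lives.
    public static ServiceProvider Build(bool useSms)
    {
        var services = new ServiceCollection();

        if (useSms)
            services.AddSingleton<INotifier, SmsNotifier>();
        else
            services.AddSingleton<INotifier, EmailNotifier>();

        services.AddTransient<OrderService>();
        return services.BuildServiceProvider();
    }
}
```

Resolving OrderService from CompositionRoot.Build(useSms: true) yields a service that notifies by SMS, while OrderService itself is untouched. The container also builds the dependency hierarchy and decides lifetimes (singleton versus transient here), so no developer has to wire that up by hand.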

Architectural seams

Architectural seams are the border between distinct parts of your system. The data, logic, API, UI, etc are all areas within an application that serve a specific purpose. The purpose of an architectural seam is to provide separation by allowing cooperation without bleeding implementation across distinct areas.

They reduce your code risk by limiting the impact of changes to an area to extend only up to the seams.

AutoMapper on the seams

AutoMapper is incredibly useful for many reasons, and reducing code risk is one of them. A well-architected system relies heavily upon models to transform and transfer data between layers at the architectural seams.

Using AutoMapper to perform this duty reduces your risk, as changes to model structures are contained in mapping profiles. You might not even need to touch the mapping profile if the affected items do not need explicit mapping.
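A minimal sketch of such a mapping profile is shown below. The models (CustomerEntity, CustomerDto) and the profile name are hypothetical; only Profile, CreateMap, ForMember and MapFrom are AutoMapper's own.

```csharp
using AutoMapper;

// Model on the data side of the seam.
public class CustomerEntity
{
    public int Id { get; set; }
    public string FirstName { get; set; } = "";
    public string LastName { get; set; } = "";
}

// Model on the API side of the seam.
public class CustomerDto
{
    public int Id { get; set; }
    public string FullName { get; set; } = "";
}

// All knowledge about how the two shapes relate lives in this one class.
public class CustomerMappingProfile : Profile
{
    public CustomerMappingProfile()
    {
        CreateMap<CustomerEntity, CustomerDto>()
            // Id has the same name on both sides and is mapped by convention.
            // FullName has no direct counterpart, so it needs an explicit rule.
            .ForMember(
                dto => dto.FullName,
                options => options.MapFrom(entity => entity.FirstName + " " + entity.LastName));
    }
}
```

If a new property with the same name is later added to both models, the profile needs no change at all. If FullName must be composed differently, the change is confined to this profile. How the profile is registered with the mapper depends on the AutoMapper version and the hosting setup, so that part is left out here.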

Source Control

Source control is a fantastic way to mitigate risk. It provides a history of changes, strategies to control code merging, etc. I do not know of any software company that does not use source control. However, there are some basic measures one can apply to enable your source control system to mitigate risk even further.

Branch policies

The master branch is your source of truth. It, therefore, needs to be carefully looked after and protected. Policies that limit how code is merged into the master branch are vital to reducing your risk. Various Git vendors, for example, provide different kinds of policies you can apply to protect your branches, and you need to find the right balance between flexibility and freedom, and allowed risk.

Commit message

There is nothing worse than trying to find a code change in history and having to trawl through rubbish comments on commits that give no proper insight into the changes they contain. At NML, we follow the Karma commit message style, and since employing it as a standard, it has made a tremendous difference in our coding lives.

Pull Request

It may seem odd to some, but there are many companies still not using pull requests for controlling code merges. Pull requests are critical to reducing risk, as they allow other skilled eyes to peruse the suggested code changes and identify problems. Even just putting the obstacle in place that forces a developer to stop and hopefully think about what he or she is about to submit for scrutiny, already reduces risk.

Conclusion

There are many other areas within the development discipline that affect code risk, and an exhaustive list would make this article impossibly long. The items mentioned here were paramount in minimizing NML and our clients' exposure to code risk, and every company should, at a minimum, apply these to their contexts.

Unit testing as a concept is simple. Write code to test code. It seems, though, that everybody forgets one fact about coding: It is really hard!

I do not think it will be a stretch to say that most production codebases lack even a decent number of unit tests. Further, I am pretty sure a lot of those unit tests tend to be flaky and fragile and at times less than useful.

Plenty of articles from people way smarter than I exist on this topic, but I would like to give some insight into the way I encourage our developers to write unit tests at NML. We did a session on this topic, but could not get into as much detail as I would have liked. My previous article on unit tests pointed out the importance that unit tests should play in a developer's day-to-day job. This article outlines the essentials on how to really get past those hurdles that make unit testing hard.

It is what I do, not how I do it

The first big mistake is to test how the target code works. The key insight is to test specific behavior in the code. The what.

The simplest strategy is to start at the top, and read through your code, identifying key entry points, and specific behavior.

I define behavior here as:

A single logical execution path from a public entry point up to where it returns or throws

public class Dodo

{

private IHabitat _habitat;

public Point Position { get; }

public Dodo(IHabitat habitat, Point initialPosition)

{

_habitat = habitat

?? throw new ArgumentNullException(nameof(habitat));

Position = initialPosition;

}

public void Fly(Direction direction)

{

if (!CanStillRun(direction))

throw new EndOfTheLineException(direction);

Move(direction);

}

private bool CanStillRun(Direction direction)

{

return _habitat.HasSpaceLeft(direction, Position);

}

public void Move(Direction direction)

{

// Don't be silly! Dodos can't fly.

// They just sit around and do nothing.

}

}

The trivial example class above will have several specific behaviors, and if we test each of those, then we will get 100% code coverage, and we will have tests that catch issues not only when somebody changes the codebase, but also when they change expected behavior.

Reading from the top, there are declarations, which by themselves are not testable. Then we hit the first entry point, which of course, is the constructor. Since we are at an entry point, we have to start identifying behaviors. The very first line in the constructor is doing something, and in fact, thanks to C# syntactic sugar it is doing two somethings.

The first thing it does is check if the habitat variable is null, and if it is it throws an exception. That is one complete behavior because the throw statement will exit the entry point. You must, therefore, write a test to cover that.

public void ConstructorThrowsWhenHabitatIsNull()

{

Action construct = () => new Dodo(null, _initialPosition);

construct.Should().Throw<ArgumentNullException>().WithMessage("*habitat*");

}

The test above uses the FluentAssertions testing library, which is excellent, and I highly recommend it. Ignoring the syntax though, notice that the test is short and that it checks for only one behavior, the throw clause.

After we have written and run our test, we start at the same entry point and follow the alternate path from that line onwards. The next behavior is the assignment to the _habitat field. Execution will not stop there, so we continue reading. On the next line, we assign the Position property, which is still not exiting. Lastly, execution will hit the end brace (}) of the constructor, and the entry point is exited. That is a complete behavior and we, therefore, need a test.

public void Constructor()

{

var dodo = new Dodo(_mockHabitat.Object, _initialPosition);

dodo.Should().NotBeNull();

dodo.Position.Should().Be(_initialPosition);

}

The test above verifies that the constructor will run to completion and return a non-null instance of a Dodo, and also that any public items affected by the behavior are what we expect.

The key takeaway here is that to verify the success or failure of a behavior you have to not only check the result, but also any side-effects.

This article will be way too long if I continue with the example, but I think the above serves to show how to go about it. Using this method, the next behaviors are:

- The Fly method throws an exception when _habitat.HasSpaceLeft returns false

- The Fly method executes and Position stays the same when _habitat.HasSpaceLeft returns true
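For completeness, the two Fly behaviors listed above could be tested along these lines. This is only a sketch: it assumes xUnit as the test framework (the article does not name one), uses Moq and FluentAssertions, and works against a trimmed-down copy of the Dodo class with stand-in definitions for Point, Direction, IHabitat and EndOfTheLineException.

```csharp
using System;
using FluentAssertions;
using Moq;
using Xunit;

// Stand-in supporting types, assumed for this sketch.
public enum Direction { North, South, East, West }

public readonly record struct Point(int X, int Y);

public interface IHabitat
{
    bool HasSpaceLeft(Direction direction, Point position);
}

public class EndOfTheLineException : Exception
{
    public EndOfTheLineException(Direction direction)
        : base($"No space left heading {direction}.") { }
}

// Trimmed-down copy of the example class.
public class Dodo
{
    private readonly IHabitat _habitat;

    public Point Position { get; }

    public Dodo(IHabitat habitat, Point initialPosition)
    {
        _habitat = habitat ?? throw new ArgumentNullException(nameof(habitat));
        Position = initialPosition;
    }

    public void Fly(Direction direction)
    {
        if (!_habitat.HasSpaceLeft(direction, Position))
            throw new EndOfTheLineException(direction);
        // Dodos can't fly, so nothing moves.
    }
}

public class DodoFlyTests
{
    private readonly Mock<IHabitat> _mockHabitat = new Mock<IHabitat>();
    private readonly Point _initialPosition = new Point(1, 2);

    [Fact]
    public void FlyThrowsWhenHabitatHasNoSpaceLeft()
    {
        _mockHabitat
            .Setup(h => h.HasSpaceLeft(Direction.North, _initialPosition))
            .Returns(false);
        var dodo = new Dodo(_mockHabitat.Object, _initialPosition);

        Action fly = () => dodo.Fly(Direction.North);

        fly.Should().Throw<EndOfTheLineException>();
    }

    [Fact]
    public void FlyLeavesPositionUnchangedWhenHabitatHasSpaceLeft()
    {
        _mockHabitat
            .Setup(h => h.HasSpaceLeft(Direction.North, _initialPosition))
            .Returns(true);
        var dodo = new Dodo(_mockHabitat.Object, _initialPosition);

        dodo.Fly(Direction.North);

        // Check the result and the side effect: position is unchanged
        // and the habitat was asked exactly once with the expected arguments.
        dodo.Position.Should().Be(_initialPosition);
        _mockHabitat.Verify(
            h => h.HasSpaceLeft(Direction.North, _initialPosition), Times.Once);
    }
}
```

Each test sets up only what its one behavior needs, and each covers a single path from the Fly entry point to where it returns or throws.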

Unit tests should verify behavior, not implementation

Interfacing Solidly

If the "D" from the SOLID principles (dependency inversion, in practice applied through dependency injection) is not at the very core of every class you design and write, you will not be able to write successful unit tests. It is just that simple. Your unit tests will be fragile, they will be unreliable, and they will cause massive maintenance headaches as tests unrelated to the actual code change break in a cascading fashion.

At NML I insist on a DI mindset. Effective dependency injection requires interfaces. If you keep to injecting only interfaces into all your classes, you will have a pleasant time writing unit tests for them. Yes, you will have a lot of interfaces that potentially have only one concrete implementation in your codebase, but it is worth it just from the benefits you gain from being able to test your code in complete isolation.

If you are using a dependency from a third-party library that does not provide an interface you can mock out, wrap it with the proxy pattern. Ideally, the only classes you should be unable to test in isolation should be proxies. Ideally... ;-)
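A sketch of what such a proxy might look like follows. VendorRateClient is a made-up stand-in for a third-party class that ships without an interface; IRateProvider, VendorRateProxy, PriceConverter and FixedRateProvider are likewise hypothetical names.

```csharp
using System;

// Stand-in for a third-party class with no interface (hypothetical).
public sealed class VendorRateClient
{
    public decimal FetchRate(string currencyCode) =>
        currencyCode == "USD" ? 1.0m : 18.5m;
}

// The abstraction the rest of the codebase depends on.
public interface IRateProvider
{
    decimal GetRate(string currencyCode);
}

// The proxy: the only class that touches the vendor type directly.
// It contains no logic of its own, so little is lost by not unit testing it.
public sealed class VendorRateProxy : IRateProvider
{
    private readonly VendorRateClient _client;

    public VendorRateProxy(VendorRateClient client)
    {
        _client = client ?? throw new ArgumentNullException(nameof(client));
    }

    public decimal GetRate(string currencyCode) => _client.FetchRate(currencyCode);
}

// Business logic depends on the interface and can be tested in isolation.
public sealed class PriceConverter
{
    private readonly IRateProvider _rates;

    public PriceConverter(IRateProvider rates)
    {
        _rates = rates ?? throw new ArgumentNullException(nameof(rates));
    }

    public decimal Convert(decimal amount, string currencyCode) =>
        amount * _rates.GetRate(currencyCode);
}

// A hand-written fake for tests. A mocking library could supply this instead.
public sealed class FixedRateProvider : IRateProvider
{
    private readonly decimal _rate;

    public FixedRateProvider(decimal rate) => _rate = rate;

    public decimal GetRate(string currencyCode) => _rate;
}
```

Tests for PriceConverter inject FixedRateProvider (or a mock of IRateProvider) and never touch the vendor class, while production code registers VendorRateProxy.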

Unit testing requires Inversion of Control

Mock and Verify

The previous two tips are integral to get the most out of libraries like Moq, RhinoMocks, and FakeItEasy. The important thing to remember about mocking dependencies is that you only mock what you need when you need it.

When you follow the "read from top to bottom" approach outlined above, you will be forced to have a mock version of all the target class dependencies by the time you have completed all the behavior tests for the constructor. At that stage, they should not be configured to return or respond to anything that was not required in the constructor.

In our example above, I would only add a response configuration for the IHabitat.HasSpaceLeft method once I identify that it is needed to test the behavior(s) passing that method call.

Unfortunately, a lot of developers tackle writing unit tests a lot like they tackle writing classes. They try to think of the tests as a whole and plan out fields and methods at the start.

Another important thing to remember is that you must verify that methods and properties on dependencies were called with the expected arguments. It is part of the behavior to call a dependency with specific data, which might undergo some kind of transformation.
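As an illustration of verifying the arguments passed to a dependency, here is a small sketch using Moq, with xUnit assumed as the test framework. IMailer and WelcomeService are hypothetical; the point is that the transformation applied before the call is part of the behavior under test.

```csharp
using System;
using Moq;
using Xunit;

public interface IMailer
{
    void Send(string address, string subject);
}

public sealed class WelcomeService
{
    private readonly IMailer _mailer;

    public WelcomeService(IMailer mailer)
    {
        _mailer = mailer ?? throw new ArgumentNullException(nameof(mailer));
    }

    public void Welcome(string address)
    {
        // The transformation (trim and lower-case) is part of the behavior.
        var normalized = address.Trim().ToLowerInvariant();
        _mailer.Send(normalized, "Welcome!");
    }
}

public class WelcomeServiceTests
{
    [Fact]
    public void WelcomeSendsToNormalizedAddress()
    {
        var mockMailer = new Mock<IMailer>();
        var service = new WelcomeService(mockMailer.Object);

        service.Welcome("  Dodo@Example.COM ");

        // Fails if Send was not called exactly once with the transformed data.
        mockMailer.Verify(
            m => m.Send("dodo@example.com", "Welcome!"), Times.Once);

        // Fails if the service called the mailer in any other way.
        mockMailer.VerifyNoOtherCalls();
    }
}
```

If somebody later removes the Trim call, nothing throws at runtime, but this test fails because the dependency receives different data.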

Unit tests should be discovered by investigating behavior

Conclusion

These three essential concepts, along with accepting that unit testing is your job, will improve your code coverage, improve your code quality, and prevent you from chasing down elusive problems later that would have been caught earlier with good unit test coverage.
